“You’ll know it when you see it.” You could make that case when going through a system selection – EHR, ERP, or other. However, most governance committees will want more quantitative facts to substantiate your selection team’s choice. As a consultancy, we are often asked to advise these committees and selection teams on which scoring methods to use and how to deploy them.

The traditional method is to assign numeric points to the criteria, tally them up, and voilà, you’ve got your answer. It sounds easy, but remember that your goal is to conduct a process and make a decision, so your selection team should consider multiple factors to ensure your ultimate choice is:

- Objective

- Balanced with representation from multiple perspectives

- Defensible to your governance body

Okay, so you’ve always tried to avoid math, and now you’re faced with scoring. My first piece of advice: don’t get too deep into the math, and don’t rely solely on math for your selection.

Much of the differentiation between systems will be revealed during demonstrations and hands-on reviews. The challenge is to translate likes and preferences – typically from users and participants – into objective and quantifiable data that will aid and support decision-making.

Define the overall scoring method

Defining your scoring method early in the process will help you educate, prepare, and galvanize your project team, and it can provide transparency to the bidders. The overall scoring method and major criteria categories can vary, but they should cover all the categories in your RFP.

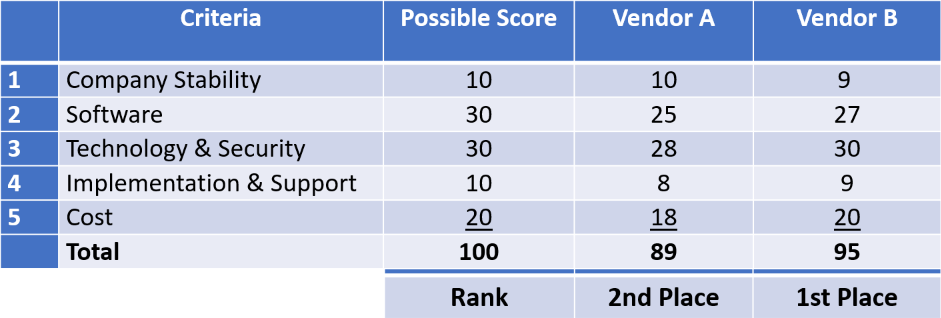

A simple method, which keeps the math easy, is to assign a “perfect score” (the maximum possible points) to each category so that the perfect scores total 100, as shown in the following example:

So in our example, the highest score, 95, wins and ranks in 1st place. The art of vendor scoring is to determine when and how to apply numeric scores vs. ranking. You will have some choices on which scoring method to use during each stage of your selection process, and you can use one or both at any point.
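The 100-point arithmetic is simple enough to sketch in a few lines of Python. This is an illustration under stated assumptions, not the post's actual example: the category names, perfect scores, and vendor points are all hypothetical, chosen only so that the leader totals 95. The sketch checks that the perfect scores add up to 100, totals each vendor, and ranks vendors by total.

```python
# A minimal sketch of 100-point category scoring. All category names,
# perfect scores, and vendor points are HYPOTHETICAL, for illustration only.

PERFECT_SCORES = {
    "Software functionality": 30,
    "Technology": 20,
    "Implementation and support": 15,
    "Vendor viability": 15,
    "Cost": 20,
}
assert sum(PERFECT_SCORES.values()) == 100, "perfect scores must total 100"

# Points each vendor earned per category (hypothetical).
VENDOR_POINTS = {
    "Vendor A": {"Software functionality": 28, "Technology": 19,
                 "Implementation and support": 14, "Vendor viability": 15, "Cost": 19},
    "Vendor B": {"Software functionality": 25, "Technology": 17,
                 "Implementation and support": 12, "Vendor viability": 14, "Cost": 18},
    "Vendor C": {"Software functionality": 22, "Technology": 18,
                 "Implementation and support": 13, "Vendor viability": 13, "Cost": 16},
}


def total_score(points: dict[str, int]) -> int:
    """Sum a vendor's category points after checking each against its perfect score."""
    if set(points) != set(PERFECT_SCORES):
        raise ValueError("every category must be scored exactly once")
    for category, earned in points.items():
        if not 0 <= earned <= PERFECT_SCORES[category]:
            raise ValueError(f"{category}: {earned} is outside 0-{PERFECT_SCORES[category]}")
    return sum(points.values())


def rank_vendors(all_points: dict[str, dict[str, int]]) -> list[tuple[int, str, int]]:
    """Return (place, vendor, total) tuples, highest total first.

    Ties are not resolved here: sorted() is stable, so tied vendors keep input order.
    """
    totals = {vendor: total_score(points) for vendor, points in all_points.items()}
    ordered = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [(place, vendor, total) for place, (vendor, total) in enumerate(ordered, start=1)]


if __name__ == "__main__":
    for place, vendor, total in rank_vendors(VENDOR_POINTS):
        print(f"{place}. {vendor}: {total}/100")
```

Keeping the perfect scores in one table makes it easy for a governance committee to see, and challenge, how much each category weighs before any vendor is scored.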

Before we get into the methods and stages in the section below, here are some working definitions:

- Quantitative – simply counting the points or quantities, e.g., how many “yes” and “no” responses.

- Semantic differentiation – a reviewer makes a subjective choice that is translated into a numeric score, typically on a 1-5 scale (e.g., “not acceptable” = 1 to “excellent” = 5).

- Ranking – determining 1st, 2nd, 3rd, etc. choices. Rankings can be determined quantitatively, subjectively, or by a combination of both.
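Each of these three definitions maps onto a small operation. The Python sketch below is illustrative only: the function names are hypothetical, and the 1-5 labels are the ones this post uses for demonstration scoring. Ranking is shown in its purely quantitative form; a subjective or combined ranking would come out of team discussion rather than code.

```python
from collections import Counter

# Semantic differentiation scale (labels as used for demo scoring in this post).
SEMANTIC_SCALE = {
    "not acceptable": 1,
    "poor": 2,
    "acceptable": 3,
    "good": 4,
    "excellent": 5,
}


def count_responses(responses: list[str]) -> Counter:
    """Quantitative: count how many times each answer (e.g. "yes"/"no") occurs."""
    return Counter(answer.strip().lower() for answer in responses)


def semantic_score(judgment: str) -> int:
    """Semantic differentiation: translate a reviewer's label into its 1-5 score.

    Raises KeyError for a label that is not on the scale.
    """
    return SEMANTIC_SCALE[judgment.strip().lower()]


def rank(scores: dict[str, float]) -> list[str]:
    """Ranking (quantitative variant): order choices from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    print(count_responses(["Yes", "no", "yes"]))   # Counter({'yes': 2, 'no': 1})
    print(semantic_score("Good"))                    # 4
    print(rank({"Vendor A": 3.4, "Vendor B": 4.1}))  # ['Vendor B', 'Vendor A']
```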

SCORING BY SELECTION STAGE

Scoring methods can vary depending on the stage of the selection process. Let’s explore how these methods might apply, recognizing that you have choices on how and when to apply them:

Stage 1: Screening – Resources such as KLAS and Black Book provide reviews of vendors, products, and services, so numeric scoring should not be necessary at this stage; a simple report of your findings should be sufficient.

Stage 2: RFP Responses – Scoring and tallying all the detailed criteria within an RFP (that’s a lot of line items) can be daunting. If you are tallying the scores, my first suggestion is to put the RFP in a spreadsheet tool such as Excel; then you can tally the number of positive and negative responses. If you want to get more sophisticated, you can also allow multiple response levels (e.g., 3 = yes, 2 = custom, 1 = future, 0 = no).

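For each category or topic (e.g., an application):

- Tally the perfect score – the score if every response were positive,

- Tally the response score – the # of positives (or the sum of response points, if you use multiple response levels), then

- Calculate [response score] / [perfect score] × 100.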
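The per-topic calculation can be sketched in Python as follows. The multi-level points mirror the example in the text (3 = yes, 2 = custom, 1 = future, 0 = no); the function name and the plain yes/no mapping are illustrative assumptions. The perfect score is taken to be every line item answered at the highest level.

```python
from collections.abc import Mapping

# Multi-level response points from the text's example.
MULTI_LEVEL_POINTS = {"yes": 3, "custom": 2, "future": 1, "no": 0}
# Simple scoring where only positives count (hypothetical mapping).
YES_NO_POINTS = {"yes": 1, "no": 0}


def topic_percentage(responses: list[str],
                     points: Mapping[str, int] = MULTI_LEVEL_POINTS) -> float:
    """Return [response score] / [perfect score] x 100 for one category or topic.

    An answer that is not in `points` raises KeyError.
    """
    if not responses:
        raise ValueError("a topic needs at least one line item")
    # Perfect score: every line item answered with the best possible response.
    perfect = len(responses) * max(points.values())
    # Response score: points for the answers the vendor actually gave.
    score = sum(points[answer.strip().lower()] for answer in responses)
    return score * 100 / perfect


if __name__ == "__main__":
    print(topic_percentage(["yes", "no", "yes", "yes"], YES_NO_POINTS))  # 75.0
    print(round(topic_percentage(["yes", "yes", "custom", "no"]), 1))    # 66.7
```

In the multi-level example, the vendor earns 8 of a possible 12 points (3 + 3 + 2 + 0 out of 4 × 3), so a “custom” answer counts for less than a “yes” but more than a “no”.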

Then you can summarize the tallies by major category. RFP response scores are typically used to determine which vendors to invite for demonstrations, so you likely do not need to rank vendors at this point.
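One way to summarize topic tallies by major category, sketched in Python for a single vendor: pool the raw response and perfect scores of every topic in the category before dividing, so that topics with more line items carry more weight. Pooling is our assumption; averaging the topic percentages instead would give every topic equal weight. The `TopicTally` type and all category, topic, and score values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TopicTally:
    major_category: str  # e.g. "Clinical" (hypothetical grouping)
    topic: str           # e.g. an application such as "Lab"
    score: int           # response score for the topic
    perfect: int         # perfect score for the topic


def summarize_by_category(tallies: list[TopicTally]) -> dict[str, float]:
    """Return {major category: pooled score / pooled perfect score x 100} for one vendor."""
    pooled: dict[str, list[int]] = {}
    for tally in tallies:
        if tally.perfect <= 0:
            raise ValueError(f"{tally.topic}: perfect score must be positive")
        totals = pooled.setdefault(tally.major_category, [0, 0])
        totals[0] += tally.score
        totals[1] += tally.perfect
    return {category: score * 100 / perfect for category, (score, perfect) in pooled.items()}


# Hypothetical tallies for one vendor.
VENDOR_A_TALLIES = [
    TopicTally("Clinical", "Nursing", 45, 60),
    TopicTally("Clinical", "Lab", 27, 30),
    TopicTally("Technical", "Infrastructure", 18, 24),
]

if __name__ == "__main__":
    print(summarize_by_category(VENDOR_A_TALLIES))  # {'Clinical': 80.0, 'Technical': 75.0}
```

Here the pooled Clinical result is 72 / 90 = 80%, whereas simply averaging the Nursing (75%) and Lab (90%) percentages would give 82.5%; whichever rule you choose, apply it the same way to every vendor.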

Stage 3: Demonstrations – In system selections, demonstrations are the most relevant stage for scoring. This is where users and participants score functions, features, and characteristics based upon what they see and hear from the vendor demonstrators and representatives. At this stage, we find it helpful to do both scoring and ranking.

- Scoring. Historically, we prepared paper scoring sheets by application and function so that, for each item (or group of items), the participating reviewer circles a score using semantic differentiation: 1 = Not Acceptable, 2 = Poor, 3 = Acceptable, 4 = Good, 5 = Excellent. Today, convenient electronic tools (e.g., Microsoft Forms) handle score submission and tallying. For each group, such as nursing, lab, or technology, simply take the average of the scores submitted, but remember to have team leaders hold validation sessions with their groups. One problem with the traditional numeric tally is that reviewers (typically after demos) may struggle with scoring or submit incomplete score sheets. If that occurs, use the numeric scores as a baseline, augment them with a team huddle, and rank the team’s choices. This is especially useful when multiple software applications are involved.

- Ranking. Ranking helps reviewers relate to their preferences by ordering their choices. Based upon the huddle, each team ranks the vendors as its 1st, 2nd, 3rd, etc. choice. You can then count the number of 1st-, 2nd-, and 3rd-place rankings each vendor received from the teams. Finally, translate the rankings back into scores; for example, with 30 possible points for software: 1st place = 30, 2nd = 25, 3rd = 20.
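The demonstration steps described above (average each group's 1-5 scores, rank, count places across teams, and translate ranks back into points) can be sketched in Python. Several choices here are our assumptions rather than the author's method: blank entries on incomplete score sheets are skipped when averaging; the score-based order is only the baseline the team huddle starts from; places below 3rd earn 0 points; and translated points are averaged across teams to give each vendor a single score out of 30. All function names, vendors, teams, and scores are hypothetical.

```python
from collections import Counter
from typing import Optional

# Rank-to-points translation from the text's example (30 possible points for software).
# Places below 3rd earn 0 points (assumption).
RANK_POINTS = {1: 30, 2: 25, 3: 20}


def group_averages(submissions: dict[str, list[Optional[int]]]) -> dict[str, float]:
    """Average one group's 1-5 scores per vendor, skipping blanks (None) from incomplete sheets."""
    averages = {}
    for vendor, scores in submissions.items():
        submitted = [s for s in scores if s is not None]
        if submitted:  # a vendor with no scores at all is left for the huddle
            averages[vendor] = sum(submitted) / len(submitted)
    return averages


def baseline_ranking(averages: dict[str, float]) -> list[str]:
    """Order vendors by average score; the team huddle may adjust this order."""
    return sorted(averages, key=averages.get, reverse=True)


def count_places(team_rankings: dict[str, list[str]]) -> dict[str, Counter]:
    """Count how many 1st, 2nd, 3rd... places each vendor received across teams."""
    places: dict[str, Counter] = {}
    for ranking in team_rankings.values():
        for place, vendor in enumerate(ranking, start=1):
            places.setdefault(vendor, Counter())[place] += 1
    return places


def ranking_points(team_rankings: dict[str, list[str]]) -> dict[str, float]:
    """Translate each team's ranks into points, then average across teams (assumption)."""
    earned: dict[str, list[int]] = {}
    for ranking in team_rankings.values():
        for place, vendor in enumerate(ranking, start=1):
            earned.setdefault(vendor, []).append(RANK_POINTS.get(place, 0))
    return {vendor: sum(pts) / len(pts) for vendor, pts in earned.items()}


# Hypothetical nursing score sheets: one entry per reviewer, None = left blank.
NURSING_SCORES = {
    "Vendor A": [5, 4, 4, None],
    "Vendor B": [3, 4, 3, 3],
    "Vendor C": [2, 3, None, 3],
}

# Hypothetical final rankings after each team's huddle.
TEAM_RANKINGS = {
    "Nursing": ["Vendor A", "Vendor B", "Vendor C"],
    "Lab": ["Vendor B", "Vendor A", "Vendor C"],
    "Technology": ["Vendor A", "Vendor C", "Vendor B"],
}

if __name__ == "__main__":
    print(baseline_ranking(group_averages(NURSING_SCORES)))  # ['Vendor A', 'Vendor B', 'Vendor C']
    print(count_places(TEAM_RANKINGS)["Vendor A"])            # Counter({1: 2, 2: 1})
    print(ranking_points(TEAM_RANKINGS))
```

With these sample rankings, Vendor A earns 30, 25, and 30 points from the three teams, or about 28.3 of 30 on average, while Vendor B averages 25.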

Stage 4: Due Diligence – I generally do not see the need for separate scoring during due diligence activities such as reference checks and site visits. I suggest simply adjusting scores if there are notable issues – positive or negative – that your group identifies.

Verify and Socialize the Scores

When you get to the “system of choice,” we have again found that ranking seems more relevant, and the numeric scores become supporting information. Socializing what each team thinks is as important to the process as the scores, if not more so. For each team or sub-team, I like to augment the rankings and scores with the three key reasons behind them. The rankings and reasons can be socialized in a larger review forum, similar to a town meeting, where a spokesperson can say “we the [… e.g., lab team] rank vendor A as the first choice for the following reasons […]”.

The outcome of this discussion should lead the group to a conclusion: a recommended system of choice, with the alternatives ranked 1st, 2nd, 3rd, etc. The process will lean on the scoring methods, but most importantly it should focus on the decision and not the math.

If you need a helping hand, our experts at HealthNET Consulting are available to assist. Just contact us to learn more about the System Selection services we provide.

Blog Post Author:

Clifton Jay, CEO

HealthNET Consulting

Clif founded HealthNET in 1990, and it is his vision that has created the long-term partnerships we have with many of our clients. With 30+ years of experience in healthcare IT and operations management, he continues to be a creative leader as HealthNET assists clients with strategies and tactics and with optimizing their use of information technology. Clif received his BS and MS in Industrial Engineering/Operations Research from Syracuse University, pursued graduate studies at MIT Sloan School of Management, and is a candidate for professional engineering registration. He is a past president of the HIMSS New England Chapter and an HFMA member. Prior to founding HealthNET, he held practice director and vice president positions for national healthcare consulting firms and was vice president for the Massachusetts Hospital Association consulting subsidiary. HealthNET employees know Clif as the “go to” person for approaching challenges with a thoughtful and unique perspective. In addition to his leadership responsibilities, Clif manages to find time to pursue his other interests as a musician, tennis player, angler, and car enthusiast.